Deep Learning, Computer Vision, and the algorithms that are shaping the future of Artificial Intelligence.

Friday, December 29, 2006

running in patchogue

When I come home for the holidays I like to go running -- unfortunately it is rather dark around suburbia at night. Unlike in Shadyside, you probably won't encounter any other joggers. Are Long Islanders just anti-running? Here is a link for my Patchogue jogging path.

Wednesday, December 20, 2006

semester complete

This past semester I've learned quite a few things. From experience, I've learned that TAN trees (Tree Augmented Naive Bayes) are not really better than the Naive Bayes classifier. I've also learned that if you have lots of training data, then simple methods like the nearest neighbour classifier work really well.
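
To make the nearest neighbour point concrete, here is a minimal 1-nearest-neighbour sketch in Python. It assumes feature vectors are plain tuples compared with Euclidean distance; the function name, labels, and data are made-up placeholders, not anything from my experiments.

```python
import math

def nearest_neighbour(train_x, train_y, query):
    """1-NN: return the label of the training example closest to `query`."""
    best_label, best_dist = None, math.inf
    for x, y in zip(train_x, train_y):
        d = math.dist(x, query)  # Euclidean distance (Python 3.8+)
        if d < best_dist:
            best_label, best_dist = y, d
    return best_label

# Hypothetical 2-D feature vectors and labels, for illustration only.
train_x = [(0.0, 0.0), (0.1, 0.2), (5.0, 5.0), (5.2, 4.9)]
train_y = ["sky", "sky", "grass", "grass"]
print(nearest_neighbour(train_x, train_y, (4.8, 5.1)))  # grass
```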

I learned a lot from Carlos Guestrin's Graphical Models class. Unlike classes I've taken in the past, this one really did present me with a plethora of new material. Junction Trees (aka Clique Trees) are really cool for exact inference. It was also really nice to see approximate inference algorithms such as loopy belief propagation and generalized belief propagation in action. Overall, I think I'll be able to apply some concepts from Conditional Random Fields and approximate inference to my own research in object recognition.
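
For anyone who hasn't seen loopy belief propagation, here is a small sketch of sum-product message passing on a pairwise Markov random field that contains a cycle. The function name `loopy_bp`, the single symmetric pairwise potential `psi` shared by every edge, the synchronous ("flooding") update schedule, and the fixed iteration count are all my own simplifying assumptions. On loopy graphs the resulting beliefs are only approximate marginals, and convergence is not guaranteed in general.

```python
from collections import defaultdict

def loopy_bp(unary, edges, psi, iters=50):
    """Sum-product loopy belief propagation on a pairwise MRF.

    unary: list of per-node potentials (each a list of length K)
    edges: list of undirected edges (i, j)
    psi:   K x K pairwise potential shared by every edge (assumed symmetric)
    Returns approximate marginals (beliefs), one list per node.
    """
    K = len(unary[0])
    nbrs = defaultdict(list)
    for i, j in edges:
        nbrs[i].append(j)
        nbrs[j].append(i)
    # One message per directed edge i -> j, initialised to all ones.
    msgs = {(i, j): [1.0] * K for i in nbrs for j in nbrs[i]}
    for _ in range(iters):
        new = {}
        for (i, j) in msgs:
            out = []
            for xj in range(K):
                total = 0.0
                for xi in range(K):
                    prod = unary[i][xi] * psi[xi][xj]
                    # Multiply in messages from every neighbour except the target j.
                    for k in nbrs[i]:
                        if k != j:
                            prod *= msgs[(k, i)][xi]
                    total += prod
                out.append(total)
            z = sum(out)
            new[(i, j)] = [v / z for v in out]  # normalise for numerical stability
        msgs = new  # synchronous update: all messages computed from the old ones
    beliefs = []
    for i in range(len(unary)):
        b = list(unary[i])
        for k in nbrs[i]:
            b = [bv * mv for bv, mv in zip(b, msgs[(k, i)])]
        z = sum(b)
        beliefs.append([v / z for v in b])
    return beliefs

# Toy example: a 3-node cycle of binary variables; node 0 has strong evidence.
unary = [[0.9, 0.1], [0.5, 0.5], [0.5, 0.5]]
edges = [(0, 1), (1, 2), (2, 0)]
psi = [[2.0, 1.0], [1.0, 2.0]]  # attractive: neighbours prefer to agree
beliefs = loopy_bp(unary, edges, psi)
print(beliefs)
```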

I also finished my Teaching Assistant requirement. I'm still amazed at the high quality of Martial Hebert's Computer Vision course -- the students who take that class are almost ready to start producing research papers in the field. If there is one thing that sticks out from that course, it is how many seemingly distinct problems get linearized and formulated as eigenvalue problems.
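
One classic example of this pattern (not necessarily one from that course): fitting a line to 2D points by total least squares reduces to finding the eigenvector of the points' scatter matrix with the smallest eigenvalue, which gives the line's unit normal. The sketch below uses a closed-form 2x2 eigen-solve in pure Python; the function name `fit_line_tls` is hypothetical, and it assumes at least two distinct points.

```python
import math

def fit_line_tls(points):
    """Total-least-squares line fit posed as a 2x2 eigenvalue problem.

    Returns (nx, ny, d) such that nx*x + ny*y = d and nx**2 + ny**2 == 1.
    The normal (nx, ny) is the eigenvector of the scatter matrix with the
    smallest eigenvalue.
    """
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Scatter matrix S = [[a, b], [b, c]] of the centred points.
    a = sum((x - mx) ** 2 for x, _ in points)
    b = sum((x - mx) * (y - my) for x, y in points)
    c = sum((y - my) ** 2 for _, y in points)
    # Smallest eigenvalue of a symmetric 2x2 matrix, in closed form.
    lam = (a + c) / 2 - math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    # Both vectors satisfy (S - lam*I) v = 0; keep the longer one to avoid
    # picking a zero vector when b == 0.
    v1 = (b, lam - a)
    v2 = (lam - c, b)
    vx, vy = max(v1, v2, key=lambda v: math.hypot(*v))
    norm = math.hypot(vx, vy)
    nx, ny = vx / norm, vy / norm
    return nx, ny, nx * mx + ny * my

points = [(0, 1), (1, 3), (2, 5), (3, 7)]  # exactly on y = 2x + 1
nx, ny, d = fit_line_tls(points)
print(nx, ny, d)
```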

On another note, I started reading Snow Crash by Neal Stephenson. It's pretty standard cyberpunk/hacker literature, and it reminds me of World of Warcraft even though I've never played the game. I like it very much so far; I should be done in a couple of days.

Wednesday, December 06, 2006

CVPR 2007: submitted!

I recently submitted a research paper to CVPR 2007. It is my first paper as first author (I was second author on an earlier paper from RPI). Unfortunately, I can't put a link up since it has to go through anonymous review.

On another note, the next conference I'm aiming for is ICCV in Brazil!

Tuesday, November 07, 2006

segmentation as inference in a graphical model

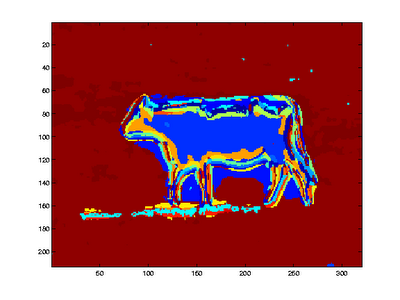

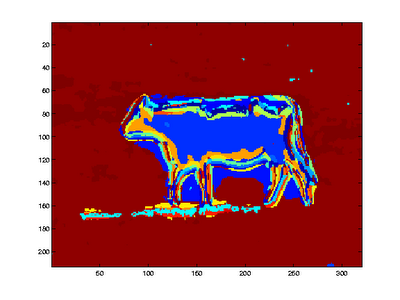

Recently, I've been playing with the idea of obtaining an image segmentation by inference on a random field. For a Probabilistic Graphical Models final class project, my teammate and I have been using Conditional Random Fields for segmentation. By posing segmentation as a superpixel labelling problem and placing a random field structure over the class posterior distribution, we were able to obtain cool-looking segmentations.
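
The project's exact model and inference procedure aren't reproduced here. As a rough illustration of superpixel labelling with a random field, the sketch below runs Iterated Conditional Modes (ICM), a simple greedy inference method swapped in for brevity, on an energy made of per-superpixel unary costs plus a Potts smoothness penalty between adjacent superpixels. The function name, the cost values, and the `smoothness` weight are all hypothetical.

```python
def icm_labelling(unary_cost, adjacency, smoothness=1.0, sweeps=10):
    """Greedy MAP-style labelling of superpixels by Iterated Conditional Modes.

    unary_cost[s][l]: cost of giving superpixel s label l (e.g. -log p(l | features))
    adjacency[s]:     superpixels that share a boundary with s
    smoothness:       Potts penalty paid for each neighbour with a different label
    """
    num_labels = len(unary_cost[0])
    # Start from the independent (per-superpixel) best label.
    labels = [min(range(num_labels), key=lambda l: costs[l]) for costs in unary_cost]
    for _ in range(sweeps):
        changed = False
        for s, costs in enumerate(unary_cost):
            def local_energy(l):
                disagreements = sum(labels[t] != l for t in adjacency[s])
                return costs[l] + smoothness * disagreements
            best = min(range(num_labels), key=local_energy)
            if best != labels[s]:
                labels[s] = best
                changed = True
        if not changed:  # local minimum reached
            break
    return labels

# Four superpixels in a chain; superpixel 2 is noisy and slightly prefers label 1.
unary_cost = [[0.1, 2.0], [0.2, 2.0], [0.9, 0.8], [0.3, 2.0]]
adjacency = [[1], [0, 2], [1, 3], [2]]
print(icm_labelling(unary_cost, adjacency, smoothness=1.0))  # [0, 0, 0, 0]
print(icm_labelling(unary_cost, adjacency, smoothness=0.0))  # [0, 0, 1, 0]
```

With the smoothness term switched off, each superpixel simply takes its own best label; switching it on lets neighbours outvote the noisy one.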

On another note, did you know that logistic regression can be viewed as a conditional random field with one output variable? Once you see this, you'll never forget why logistic regression looks the way it does. Maybe you should read this really cool CRF tutorial.
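
Here is a sketch of the derivation, assuming a single binary output y in {0, 1} and feature functions f_k(y, x) = y x_k (one weight w_k per input dimension). Plugging these into the usual CRF form, the normaliser Z(x) has only two terms, and the sigmoid falls right out:

```latex
p(y \mid \mathbf{x}) = \frac{1}{Z(\mathbf{x})} \exp\Big(\sum_k w_k f_k(y, \mathbf{x})\Big),
\qquad f_k(y, \mathbf{x}) = y\, x_k

Z(\mathbf{x}) = \sum_{y' \in \{0,1\}} \exp\big(y'\, \mathbf{w}^\top \mathbf{x}\big)
             = 1 + \exp\big(\mathbf{w}^\top \mathbf{x}\big)

p(y = 1 \mid \mathbf{x}) = \frac{\exp(\mathbf{w}^\top \mathbf{x})}{1 + \exp(\mathbf{w}^\top \mathbf{x})}
                         = \frac{1}{1 + \exp(-\mathbf{w}^\top \mathbf{x})}
                         = \sigma(\mathbf{w}^\top \mathbf{x})
```

With more than two classes, the same argument gives the softmax (multinomial logistic regression).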

Wednesday, October 25, 2006

are you a frequentist? (Bayesianism vs Frequentism)

The answer is either a crisp yes (if you are one of those) or a fuzzy probably-not. As Carlos pointed out in Graphical Models class today, if you are a Bayesian, then you would assign a probability in (0,1) to your being a Bayesian.

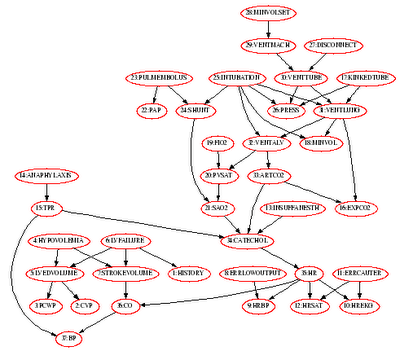

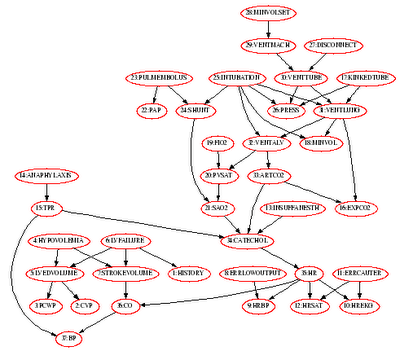

On another note, I'm finishing up yet another excruciating Probabilistic Graphical Models homework. This time, we had to implement variable elimination for exact inference in Bayesian networks. Additionally, we implemented the min-fill heuristic to find a good variable elimination ordering. The 37-node ALARM network is depicted above (graphviz baby!). I wonder if my variable elimination code is good enough to work on 1000-node networks. Only one way to find out. :-)
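
Here is a rough sketch of the min-fill heuristic in Python (not my homework code). It assumes the Bayesian network has already been moralized into an undirected graph, given as a dict mapping each variable to its set of neighbours. At each step it eliminates the variable whose removal adds the fewest fill-in edges, breaking ties alphabetically; the function name and toy graph are hypothetical.

```python
from itertools import combinations

def min_fill_order(adjacency):
    """Greedy min-fill elimination ordering for an undirected (moralized) graph.

    adjacency: dict mapping each variable to a set of neighbours (symmetric).
    """
    graph = {v: set(nbrs) for v, nbrs in adjacency.items()}
    order = []
    while graph:
        def fill_in(v):
            # Number of neighbour pairs that are not yet connected.
            return sum(1 for a, b in combinations(graph[v], 2) if b not in graph[a])
        v = min(sorted(graph), key=fill_in)  # sorted() makes ties deterministic
        # Eliminating v connects its remaining neighbours into a clique.
        for a, b in combinations(graph[v], 2):
            graph[a].add(b)
            graph[b].add(a)
        for u in graph[v]:
            graph[u].discard(v)
        del graph[v]
        order.append(v)
    return order

# Toy moral graph: a 4-cycle A-B-C-D-A plus a leaf E hanging off A.
moral = {
    "A": {"B", "D", "E"},
    "B": {"A", "C"},
    "C": {"B", "D"},
    "D": {"A", "C"},
    "E": {"A"},
}
print(min_fill_order(moral))  # ['E', 'A', 'B', 'C', 'D']
```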

On another note, I've been practicing Milonga del Angel by Astor Piazzolla on my classical guitar.

Wednesday, September 27, 2006

First half-lecture in front of a class

Yesterday I taught my first half-lecture for Martial's Computer Vision class. He was out of town, so we (the TAs) had to present some new class material and go over the previous and current homework assignments. I think it went well.

Monday, September 18, 2006

textonify: texture classification with filters

A really good resource for vision researchers interested in texture-based classification is the Visual Geometry Group Texture Classification With Filters page.
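
A common texton pipeline clusters per-pixel filter-bank responses with k-means, treats the cluster centres as textons, labels every pixel with its nearest texton (the texton map), and describes a texture by its texton histogram. The sketch below assumes the filter responses have already been computed (the filter bank itself is not shown); all function names and the toy data are hypothetical.

```python
import random

def sq_dist(u, v):
    """Squared Euclidean distance between two response vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def nearest(v, centres):
    """Index of the centre closest to v."""
    return min(range(len(centres)), key=lambda i: sq_dist(v, centres[i]))

def kmeans(vectors, k, iters=20, seed=0):
    """Plain k-means; the returned centres play the role of textons."""
    rng = random.Random(seed)
    centres = [list(c) for c in rng.sample(vectors, k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in vectors:
            groups[nearest(v, centres)].append(v)
        # Move each centre to the mean of its group (keep it if the group is empty).
        centres = [
            [sum(dim) / len(g) for dim in zip(*g)] if g else centres[i]
            for i, g in enumerate(groups)
        ]
    return centres

def texton_map(responses, textons):
    """responses[r][c] is the filter-response vector at pixel (r, c)."""
    return [[nearest(v, textons) for v in row] for row in responses]

def texton_histogram(tmap, k):
    """Normalised texton frequencies, a simple texture descriptor."""
    counts = [0] * k
    for row in tmap:
        for t in row:
            counts[t] += 1
    total = sum(counts)
    return [c / total for c in counts]

# Toy 2x3 "image" of 2-D filter responses (made-up numbers).
responses = [
    [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0)],
    [(5.1, 5.0), (0.0, 0.1), (5.0, 5.2)],
]
pixels = [v for row in responses for v in row]
textons = kmeans(pixels, k=2)
tmap = texton_map(responses, textons)
print(tmap)
print(texton_histogram(tmap, 2))  # [0.5, 0.5]
```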

For your enjoyment, here is a textonmap image:
